Introduction

The Covid-19 era has brought public safety and disease spread prevention to the forefront of both scientific and public attention. One of the most powerful weapons in mankind's arsenal against the pandemic are the various types of masks. Wearing one these days, became imperative to entering most public and private venues and on many occasions to even stepping out of the house.

Drawing inspiration from this phenomenon, Satori Analytics experimented with the development of an AI algorithm which was capable of detecting in real time the correct use of masks. Let's dive in and see a breakdown of the thought and development process behind the solution.

Defining the Problem

In every problem solving methodology the first step is to define the problem.

In this case, the algorithm developed should: Accurately detect any face and distinguish between proper and improper use (or even complete absence of) of masks, even when two or more faces are close to each other. Perform real - time mask detection, even when low end devices are used.

Defining the AI algorithm

The problem under scrutiny falls under the broad category of object detection. Object detection combines localisation and classification of one or more objects in an image. Object localisation refers to locating the presence of objects in an image and indicating their position with a bounding box, while object classification refers to predicting the type or class of an object.

Modern object detectors can be generally classified into two categories, one - stage detectors and two-stage detectors. The former performs object detection and classification in a single step thus having superior detection speed compared to two-stage methods. The most popular framework of one - shot detectors is YOLO which is an abbreviation for the term “You Only Look Once”. It can detect multiple objects in an image in real time without compromising accuracy therefore this framework is ideal for the intended solution.

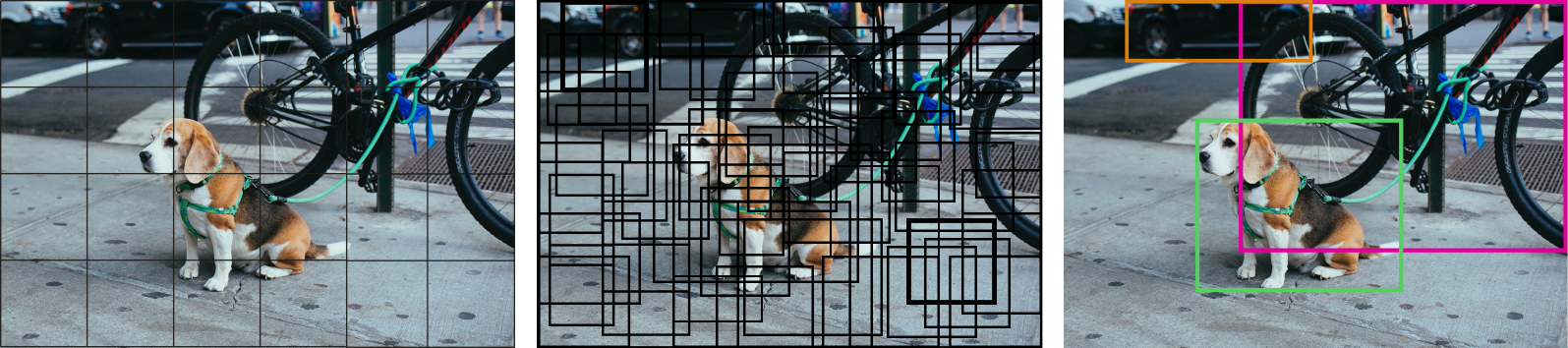

Having experimented with several detection algorithms in this project, detecting faces that were close to one another proved to be much harder than expected. Imagine a scenario under which the input image is that of multiple people being captured by the camera feed in variable distances. YOLO effectively deals with this issue by dividing the input image in n equally sized grids, each being responsible for detecting and classifying the object it contains. More specifically, each grid predicts the bounding box coordinates and dimensions, the object class and the probability that an object is present in the cell. We can imagine the bounding box as a simple orthogonal shape containing the detected object. Different sized bounding boxes are responsible for detecting different face sizes (due to proximity).The more bounding boxes we utilize, the more accurate detection can be, at the expense of speed of course.

When a face is very close to the capture device, it is possible that it will extend to multiple grid cells, resulting in multiple detections of the same object. YOLO deals with this by introducing “Non Maximal Suppression”, an interactive process that suppresses the bounding boxes with low probability scores that have the largest overlap, with the current high probability bounding box.

To put it all in perspective, for each grid cell YOLO will produce:

The ‘Non Maximal Suppression” process will keep only the most dominant ones, which will represent the final location of all faces in each video frame.

Choosing the Train Dataset

An AI system is as good as the data it is fed. So in this step, having already defined the framework to be deployed and the intended use, we were able to determine the most appropriate training set. Consequently, the open ‘Mask Dataset'' by MakeML was used for following reasons:

- It is a dataset containing people with and without masks in various backgrounds, angles and lightning conditions, thus enabling our algorithm to generalize. It also includes edge cases where the mask is worn incorrectly, which in our case were labeled as “without mask”.

- It is a pre-labeled dataset (A Pre-labeled dataset includes positional data of the true bounding boxes encapsulating with or without mask faces alongside their correct label. As a result no manual labeling was needed.)

As a next step, further training to a more diverse data set could be utilized in order to enable the algorithm to distinguish masks from other objects people tend to have or wear close to their face (scarves or even their own hands).

End Solution

Having customized and trained the AI algorithm we proceeded on building a basic application around it. The application captures live camera feed. Sequentially, it passes each frame of the feed through the YOLO framework, which in turn produces the bounding boxes around the detected faces alongside the labels “with mask” or “without mask”. In case a face not wearing a mask is detected, the application can play a pre-recorded voice message which prompts the person to wear his/her mask. The algorithm also produces a compliance score capturing the percentage of mask wearing detections during the last hour and throughout the day as total. The resulting detections and the compliance scores are visualized on the original video feed in real time as seen on visual 3. At this point it is important to note that this AI model has no memory. Thus, it can detect faces in each frame, but it cannot match the same face across frames.

Epilogue

We are not looking forward to a world where people have to wear protective masks at all times. However, if we learned one thing from the COVID-19 pandemic, it is that the possibility of an epidemic is something that we may have to face again in our lifetime.

In dire times, Data Science can help humanity stay safe, and our solution is an example of how this can be achieved.

References

- make ml, 2020: Mask Dataset, Make ML, accessed 1 October 2021, https://makeml.app/datasets/mask

- Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark, 2021, Scaled-YOLOv4: Scaling Cross Stage Partial Network, Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2021, 13029-13038